Boseong Jeon

🤖 I deliver full-stack robot autonomy.

1. Education

- 2013-2015: Started in architecture and architectural engineering @ Seoul National University.

- 2015-2017: BS in mechanical & aerospace engineering @ Seoul National University

- 2017-2022: PhD in Robotics at the Lab for Autonomous Robotics Research, advisor: H. Jin Kim. Graduated in 5 years.

- 2022-Current: Staff engineer @ Samsung Research

2. Core Research

A. Hierarchical Motion Planning for Active Vision

Designed a real-time motion planning framework for generating high-quality robot trajectories that balance multiple complex and often conflicting objectives. Specifically, I developed an aerial chasing system that maintains target visibility, ensures safety, and optimizes travel efficiency simultaneously. All algorithms were deployed on onboard computers, and I independently built the entire system, including the hardware. Watch the demo on YouTube 🚀

Academic Publications (1st Author Only)

- Online trajectory generation of a MAV for chasing a moving target in 3D dense environments (2019 IROS) 📄

- Integrated motion planner for real-time aerial videography with a drone in a dense environment (2020 ICRA) 📄

- Detection-aware trajectory generation for a drone cinematographer (2020 IROS) 📄

- Autonomous aerial dual-target following among obstacles (IEEE Access) 📄

- Aerial chasing of a dynamic target in complex environments (IJCAS) 📄

B. On-device generative AI (Galaxy AI)

Led the development of an on-device generative image model deployed on Galaxy S25, delivering performance comparable to Google’s Imagen 3 cloud model. I was responsible for the entire training and data pipeline, including: 1) LoRA-based adaptation of a large foundation model, 2) downstream fine-tuning for image generation tasks, and 3) reward modeling and fine-tuning prior to quantization for efficient on-device deployment. I focused in particular on enabling a single model to perform multiple tasks and on improving output quality under severe memory and computation-time constraints.

Technical Reports

I wrote the following related articles. Please note that our company does not allocate dedicated time for research or paper writing.

- ControlFill: Spatially Adjustable Image Inpainting from Prompt Learning (2024 Samsung Paper Award) 📄

- CrimEdit: Controllable Editing for Object Removal, Insertion, and Movement (2025, under review) 📄

- SPG: Improving Motion Diffusion by Smooth Perturbation Guidance (2025 arXiv) 📄

C. Robot Foundation Model and On-device VLAs (A+B)

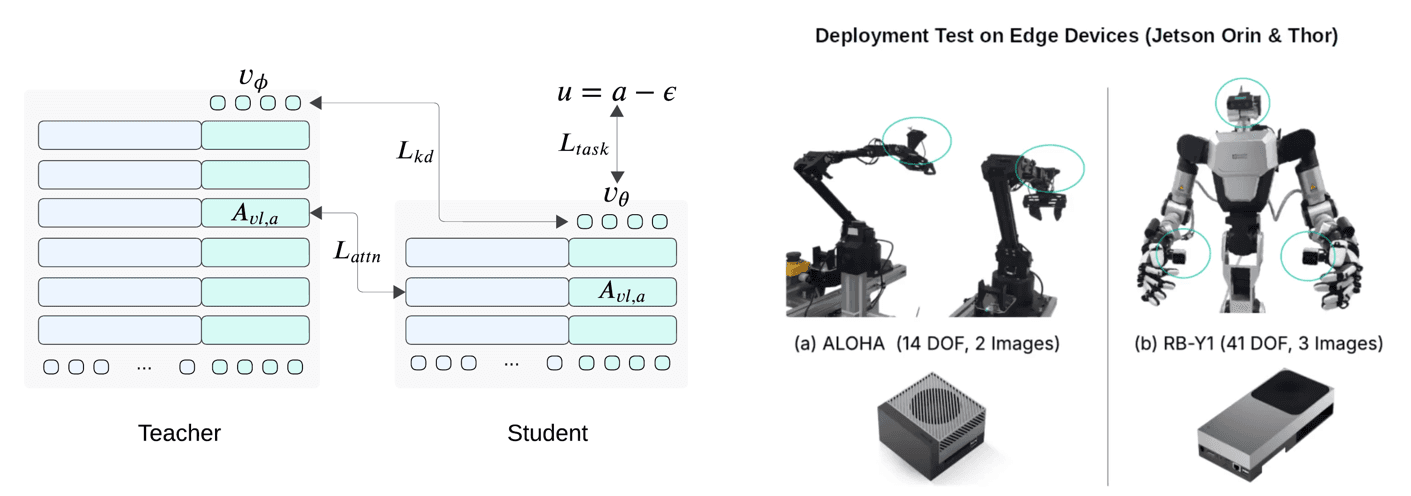

I am currently working on accelerating VLAs through knowledge distillation or, for VLAs with diffusion heads, step distillation. Using these methods, I have deployed the accelerated models on robots running on multiple edge devices.

Technical Reports

- Shallow-π: Knowledge Distillation for Flow-based VLAs 🖥️

3. Past Projects

Task Allocation for Rescue Robots (2017~2019)

Performed research with KIRO. The problem: given a fleet of atypical robots, assign a task sequence to each robot, taking into account each robot's mobility and the available mapping information. I developed an auction algorithm whose bids incorporate A* optimal connecting paths.
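As a rough illustration of the idea (not the project's actual implementation), the sketch below runs a sequential single-item auction on a 4-connected occupancy grid, where each robot's bid for a task is the A* path length from its current position. The grid model, the function names `astar_cost` and `sequential_auction`, and the lowest-bid-wins rule are all assumptions made for this example; per-robot mobility differences are not modeled.

```python
import heapq


def astar_cost(grid, start, goal):
    """Shortest 4-connected path length on a grid (0 = free, 1 = blocked).

    Returns None when the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: admissible and consistent for unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    best = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            return g
        if g > best[cell]:
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < best.get(nxt, float("inf")):
                    best[nxt] = ng
                    heapq.heappush(open_heap, (ng + heuristic(nxt), ng, nxt))
    return None


def sequential_auction(grid, robots, tasks):
    """Assign every reachable task to a robot, one auction round per task.

    robots: dict name -> (row, col) start cell; tasks: list of (row, col).
    Returns dict name -> ordered list of task cells.
    """
    plans = {name: [] for name in robots}
    position = dict(robots)
    remaining = list(tasks)
    while remaining:
        bids = []
        for name in sorted(position):
            for task in remaining:
                cost = astar_cost(grid, position[name], task)
                if cost is not None:
                    bids.append((cost, name, task))
        if not bids:
            break  # no remaining task is reachable by any robot
        _, winner, task = min(bids)  # lowest path cost wins the round
        plans[winner].append(task)
        position[winner] = task  # the winner bids from its new location
        remaining.remove(task)
    return plans


grid = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
plans = sequential_auction(grid, {"a": (0, 0), "b": (2, 3)}, [(0, 2), (2, 2), (0, 4)])
```

Because each winner's position is updated after every round, later bids reflect the path from the end of that robot's current sequence, which is what turns a set of single assignments into a task sequence.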

Autonomous Driving in Unstructured Environments (2019~2020)

Developed a driving stack for unstructured roads using sensors such as LiDAR and GPS. With KETI, we conducted real-car testing. The stack includes ground-based mapping, moving object prediction, and hierarchical motion planning composed of safe driving area estimation and an MPC reference tracker. YouTube

traj_gen: A Continuous Trajectory Generation with Simple API (230+ Stars)

traj_gen is a continuous trajectory generation package that minimizes high-order derivatives along the trajectory while satisfying waypoint (equality) and axis-parallel box (inequality) constraints. The objective and constraints are formulated as a quadratic program (QP) to ensure real-time performance. GitHub
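To give a feel for this kind of formulation, here is a minimal sketch that is not the traj_gen API: a single 1D polynomial whose integrated squared jerk is minimized subject to waypoint equality constraints, solved through the KKT linear system of the equality-constrained QP. The function names, the choice of jerk as the minimized derivative, the single-segment polynomial, and the assumption that the first waypoint time is 0 are all simplifications for illustration; the box (inequality) constraints are omitted.

```python
def jerk_cost_matrix(order, horizon):
    """Q with c^T Q c = integral over [0, horizon] of (third derivative)^2."""
    n = order + 1
    Q = [[0.0] * n for _ in range(n)]
    for i in range(3, n):
        for j in range(3, n):
            ci = i * (i - 1) * (i - 2)
            cj = j * (j - 1) * (j - 2)
            power = i + j - 5
            Q[i][j] = ci * cj * horizon ** power / power
    return Q


def solve_linear(M, rhs):
    """Solve M x = rhs by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [rhs[k]] for k, row in enumerate(M)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def min_jerk_through(times, values, order=5):
    """Coefficients c_0..c_order of the min-jerk polynomial hitting each waypoint.

    Assumes times[0] == 0 and strictly increasing times.
    """
    n, m = order + 1, len(times)
    Q = jerk_cost_matrix(order, times[-1])
    A = [[t ** i for i in range(n)] for t in times]  # rows: p(t_k) = values[k]
    size = n + m
    K = [[0.0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            K[i][j] = 2.0 * Q[i][j]  # gradient of c^T Q c
        for k in range(m):
            K[i][n + k] = A[k][i]  # A^T block (Lagrange multipliers)
            K[n + k][i] = A[k][i]  # A block (waypoint constraints)
    rhs = [0.0] * n + list(values)
    return solve_linear(K, rhs)[:n]


def evaluate(coeffs, t):
    return sum(c * t ** i for i, c in enumerate(coeffs))


waypoint_times = [0.0, 1.0, 2.0, 3.0]
waypoint_values = [0.0, 1.0, 0.0, 2.0]
coeffs = min_jerk_through(waypoint_times, waypoint_values)
```

Evaluating `evaluate(coeffs, t)` at each waypoint time reproduces the waypoint values. Adding inequality constraints such as axis-parallel boxes would require a general QP solver rather than a single linear solve.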

Incubation Project for Advancing Navigation of Samsung Robot Platform (SRP) (2023)

As part of an initiative to extend the navigation tree of SRP with forward–backward motion and rectangular collision modeling, I developed a reference pose trajectory and its corresponding safe corridor using a variant of the hybrid A* algorithm (YouTube).

A real-robot demonstration video is available here.